The Math and Code Behind…

Facial alignment is a prereq…

2 years, 10 months ago

by Sabbir Ahmed

Head pose estimation is widely used in computer vision applications such as VR, hands-free gesture control, driver attention detection, and gaze estimation. In this post, we will learn how to estimate head pose with OpenCV and Dlib.

In computer vision, pose estimation refers to the orientation and position of an object relative to a camera; the reference frame is the camera's own coordinate system. It is often framed as the Perspective-n-Point (PnP) problem. The problem definition is simple: given a set of 3D points in a world reference frame and their corresponding 2D projections in an image taken by the camera, find the rotation and translation that map the world points into the camera frame.

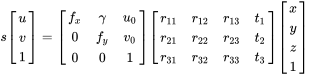

Let’s examine the definition of the problem as an equation —

The left side of the equation, s[u v 1]ᵀ, denotes a 2D point in the image taken by the camera, written in homogeneous coordinates and scaled by s. On the right side, the first portion, which looks like an upper triangular matrix, is the camera matrix: f_x and f_y are the focal lengths, γ is the skew parameter, which we will leave as 1 in our code (for most real cameras it is close to 0), and (u₀, v₀) is the principal point, which we take to be the center of the image. The middle portion, r and t, represents the rotation and translation. The final portion denotes a point of the 3D model of the face, which we will see in a bit. For now, we will leave the theory and maths at that.
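For reference, the relation described here can be written out in the standard pinhole-camera form. This is a reconstruction from the description in the text rather than the original figure; it follows the usual convention in which the third homogeneous image coordinate is 1, and the entry names r_{ij}, t_x, t_y, t_z and (X, Y, Z) are conventional symbols assumed for illustration:

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix}
f_x & \gamma & u_0 \\
0   & f_y    & v_0 \\
0   & 0      & 1
\end{bmatrix}
\begin{bmatrix}
r_{11} & r_{12} & r_{13} & t_x \\
r_{21} & r_{22} & r_{23} & t_y \\
r_{31} & r_{32} & r_{33} & t_z
\end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
```

Solving PnP means recovering the middle matrix when the camera matrix and several pairs of (X, Y, Z) and (u, v) are known.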


Understanding The “WHY and How!?”

Our application needs to detect a face and predict its landmark shape before it can solve PnP and estimate the pose. For both face detection and shape prediction we will use dlib.

    import os
    import sys

    import dlib

    # use models/downloader.sh
    PREDICTOR_PATH = os.path.join("models", "shape_predictor_68_face_landmarks.dat")
    if not os.path.isfile(PREDICTOR_PATH):
        print("[ERROR] USE models/downloader.sh to download the predictor")
        sys.exit(1)

    # HOG-based frontal face detector and 68-point landmark predictor
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(PREDICTOR_PATH)

We are now set to solve PnP. But first, recall from the equation above that we need three things to solve PnP and get the rotation and translation of the object (the face, in our case): the 3D model points, the corresponding 2D image points, and the camera matrix. Let’s code these prerequisites:

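    import numpy as np

    def ref3DModel():
        # Generic 3D face model points, in arbitrary model units
        modelPoints = [[0.0, 0.0, 0.0],           # nose tip
                       [0.0, -330.0, -65.0],      # chin
                       [-225.0, 170.0, -135.0],   # left corner of the left eye
                       [225.0, 170.0, -135.0],    # right corner of the right eye
                       [-150.0, -150.0, -125.0],  # left corner of the mouth
                       [150.0, -150.0, -125.0]]   # right corner of the mouth
        return np.array(modelPoints, dtype=np.float64)

    def ref2dImagePoints(shape):
        # dlib 68-point landmark indices, in the same order as the 3D model
        imagePoints = [[shape.part(30).x, shape.part(30).y],
                       [shape.part(8).x, shape.part(8).y],
                       [shape.part(36).x, shape.part(36).y],
                       [shape.part(45).x, shape.part(45).y],
                       [shape.part(48).x, shape.part(48).y],
                       [shape.part(54).x, shape.part(54).y]]
        return np.array(imagePoints, dtype=np.float64)

    def cameraMatrix(fl, center):
        # center is (u0, v0); the skew entry is set to 1 as noted earlier
        cameraMatrix = [[fl, 1, center[0]],
                        [0, fl, center[1]],
                        [0, 0, 1]]
        # np.float was removed in NumPy 1.24, so use np.float64
        return np.array(cameraMatrix, dtype=np.float64)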

The six points in ref3DModel() and ref2dImagePoints() correspond to the nose tip, the chin, the left corner of the left eye, the right corner of the right eye, and the left and right corners of the mouth. You can visualize all the points and add more of them by editing Visualize3DModel.py.
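To make the role of the model points and the camera matrix concrete, here is a minimal sketch of the forward pinhole projection that PnP inverts. It is written in plain Python so it runs without OpenCV. The function name `project_point`, the intrinsics in `K` (with skew set to 0 for simplicity), and the pose in `T` are all hypothetical values chosen for illustration, not part of the original code.

```python
def project_point(point3d, rotation, translation, camera_matrix):
    """Project one 3D world point to pixel coordinates with the pinhole model."""
    # Camera-frame coordinates: X_c = R * X_w + t
    cam = [sum(rotation[i][j] * point3d[j] for j in range(3)) + translation[i]
           for i in range(3)]
    # Homogeneous image coordinates: s * [u, v, 1]^T = K * X_c
    hom = [sum(camera_matrix[i][j] * cam[j] for j in range(3)) for i in range(3)]
    s = hom[2]  # the scale factor equals the depth of the point
    return hom[0] / s, hom[1] / s


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Hypothetical intrinsics for a 640x480 image (assumption): skew 0, center (320, 240)
K = [[640.0, 0.0, 320.0], [0.0, 640.0, 240.0], [0.0, 0.0, 1.0]]
# Hypothetical pose (assumption): no rotation, face 1000 model units from the camera
T = [0.0, 0.0, 1000.0]

nose = project_point([0.0, 0.0, 0.0], IDENTITY, T, K)
chin = project_point([0.0, -330.0, -65.0], IDENTITY, T, K)
print("nose tip:", nose)
print("chin:", chin)
```

With this pose the nose tip, which sits at the model origin, lands on the principal point. solvePnP works in the opposite direction: given the projected pixels and the model points, it searches for the rotation and translation that reproduce them.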

OpenCV provides two simple APIs to solve PnP:

- solvePnP
- solvePnPRansac

In our case, we will use solvePnP. Here, this API takes 4 input parameters:

- the 3D model points from ref3DModel()
- the 2D image points from ref2dImagePoints()
- the camera matrix from cameraMatrix()
- the distortion coefficients, np.zeros((4, 1)), which assume no lens distortion

After solving PnP, the API returns a success flag, a rotation vector, and a translation vector.

    # world is the module that holds the helper functions defined above
    face3Dmodel = world.ref3DModel()
    refImgPts = world.ref2dImagePoints(shape)

    height, width, channels = img.shape
    focalLength = args.focal * width
    # the principal point is (x, y) = (width / 2, height / 2)
    cameraMatrix = world.cameraMatrix(focalLength, (width / 2, height / 2))

    # distortion coefficients: assume no lens distortion
    mdists = np.zeros((4, 1), dtype=np.float64)

    # calculate rotation and translation vector using solvePnP
    success, rotationVector, translationVector = cv2.solvePnP(
        face3Dmodel, refImgPts, cameraMatrix, mdists)

The focal length is an intrinsic property of the camera hardware, so for accurate results it should be obtained by calibrating the camera. The code above only approximates it as args.focal * width.
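When no calibration is available, one common rough estimate derives the focal length in pixels from the camera's horizontal field of view: f = (w / 2) / tan(FOV / 2). The sketch below is not part of the original code; the function name `focal_length_pixels` and the 60 degree field of view are assumptions for illustration, and the real value depends on your camera.

```python
import math


def focal_length_pixels(image_width, horizontal_fov_deg):
    """Approximate the focal length in pixels from the horizontal field of view."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width / 2.0) / math.tan(half_fov)


# Hypothetical camera (assumption): 640 pixels wide, 60 degree horizontal FOV
print(focal_length_pixels(640, 60.0))
```

For a 640 pixel wide image and a 60 degree field of view this gives roughly 554 pixels. A proper calibration remains the more reliable route.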

Getting the Euler Angles

Finally, we can extract the roll, pitch, and yaw, that is, the Euler angles, using OpenCV's RQDecomp3x3 API. It needs a rotation matrix rather than a rotation vector, and what solvePnP gave us is a rotation vector. Fortunately, OpenCV has another API called Rodrigues, which converts a rotation vector to a rotation matrix and vice versa. This is how we can implement it:

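    # convert the rotation vector to a 3x3 rotation matrix
    rmat, jac = cv2.Rodrigues(rotationVector)
    # decompose the matrix; angles holds the three Euler angles in degrees
    angles, mtxR, mtxQ, Qx, Qy, Qz = cv2.RQDecomp3x3(rmat)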
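To show what the rotation-vector-to-matrix conversion does, here is a minimal plain-Python sketch of the Rodrigues formula, R = cos θ · I + (1 − cos θ) · k kᵀ + sin θ · [k]×, where the rotation vector's direction k is the axis and its length θ is the angle in radians. The helper name `rodrigues` is hypothetical; this is an illustration of the math, not OpenCV's implementation.

```python
import math


def rodrigues(rvec):
    """Convert a rotation vector (axis * angle, in radians) to a 3x3 matrix."""
    theta = math.sqrt(sum(c * c for c in rvec))
    if theta < 1e-12:
        # No rotation: return the identity matrix
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in rvec)
    k = (kx, ky, kz)
    # Skew-symmetric cross-product matrix [k]x
    cross = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    c, s = math.cos(theta), math.sin(theta)
    return [[c * (i == j) + (1.0 - c) * k[i] * k[j] + s * cross[i][j]
             for j in range(3)] for i in range(3)]


# A quarter turn about the z axis
R = rodrigues([0.0, 0.0, math.pi / 2])
for row in R:
    print([round(v, 6) for v in row])
```

A quarter turn about the z axis maps the x axis onto the y axis, so the first column of the result is (0, 1, 0) up to rounding.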

The final output should look something like this —

Sample Head Pose Estimation

Concluding with Summary

We started by detecting a face and predicting its landmark shape. Then we calculated the rotation and translation vectors with solvePnP. Finally, we got the rotation angles with RQDecomp3x3. Yet another easy three-step process. Computer vision is fun.